Why authentication is a challenge for AI agents

AI agents are a rapidly evolving technology. The introduction of LLMs, and their ability to interact with other software autonomously, has paved the way for a new wave of technological innovation. This is an exciting development, but it needs appropriate guardrails to ensure that an agent is really enacting your wishes and not sending rogue emails to your entire address book on your behalf. This is the first of a two-part series on the challenges of authenticating and authorizing agents appropriately so that they can safely fulfill requests.

The misaligned incentives of agents and authentication

Authentication is one of the best-understood guardrails of the internet. These days, we take for granted that you cannot easily send an email on someone else’s behalf. In the earliest days of the internet, people wrote basic bots to brute-force usernames and passwords. Today, widely deployed methods such as OAuth, combined with CAPTCHAs, 2FA, and IP checks, ensure that the appropriate human is present and hard to impersonate.

This presents a problem for agents. Their inherent value proposition is to act autonomously, whereas authentication has evolved precisely to ensure that the appropriate human is present for certain tasks.

Most agentic systems solve this today by pre-authenticating and pre-authorizing the agent for any action it might want to take. The problem is that this pre-emptive access is far too broad and essentially removes one of the best safety guardrails we already have. You can add a human-in-the-loop check instead, as many agentic systems do, but this is a bit like handing a burglar the keys to the castle and then putting an electric fence around the perimeter: we are removing the authentication barrier and replacing it with a less sophisticated system.

Conversely, pre-authorization also fundamentally limits the potential of our agents. Ideally, you want a system that grants the agent the minimal set of privileges it needs to achieve its tasks. With pre-authorization, unless your end user is happy to sit and grant a batch of authorizations that the agent may never use, you end up inadvertently limiting how many tools and systems your agent can access. Naive pre-authentication makes it hard to strike the balance between overgranting and undergranting, and so ends up being both a limiting factor and a far too permissive guardrail for agents.

We’ve been trying to think about how just-in-time authorization can work for agents. How can we create an agentic system that enables agents to get the authorization they require only when it is clear that they require it?

An architecture for just-in-time agent authentication and authorization

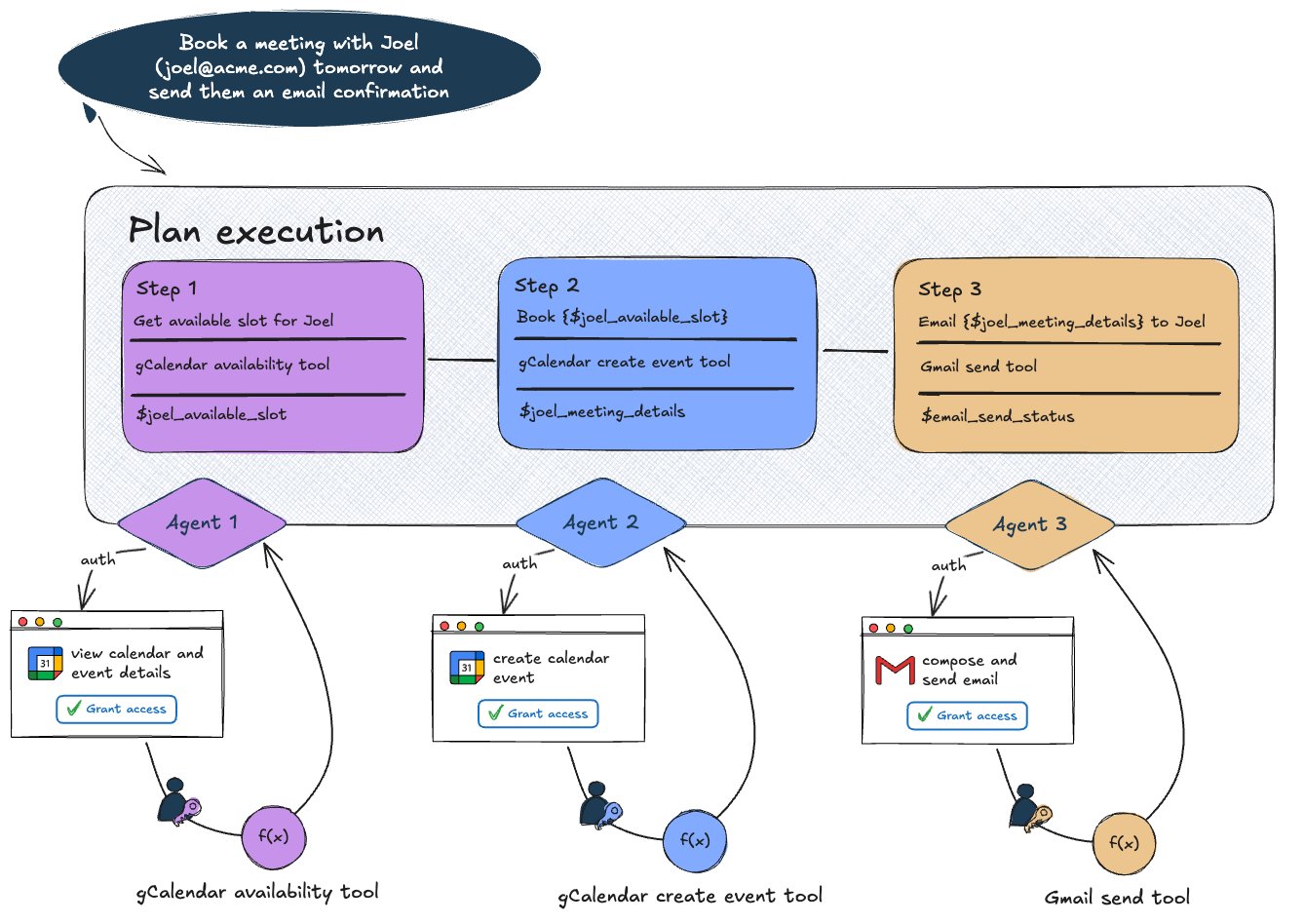

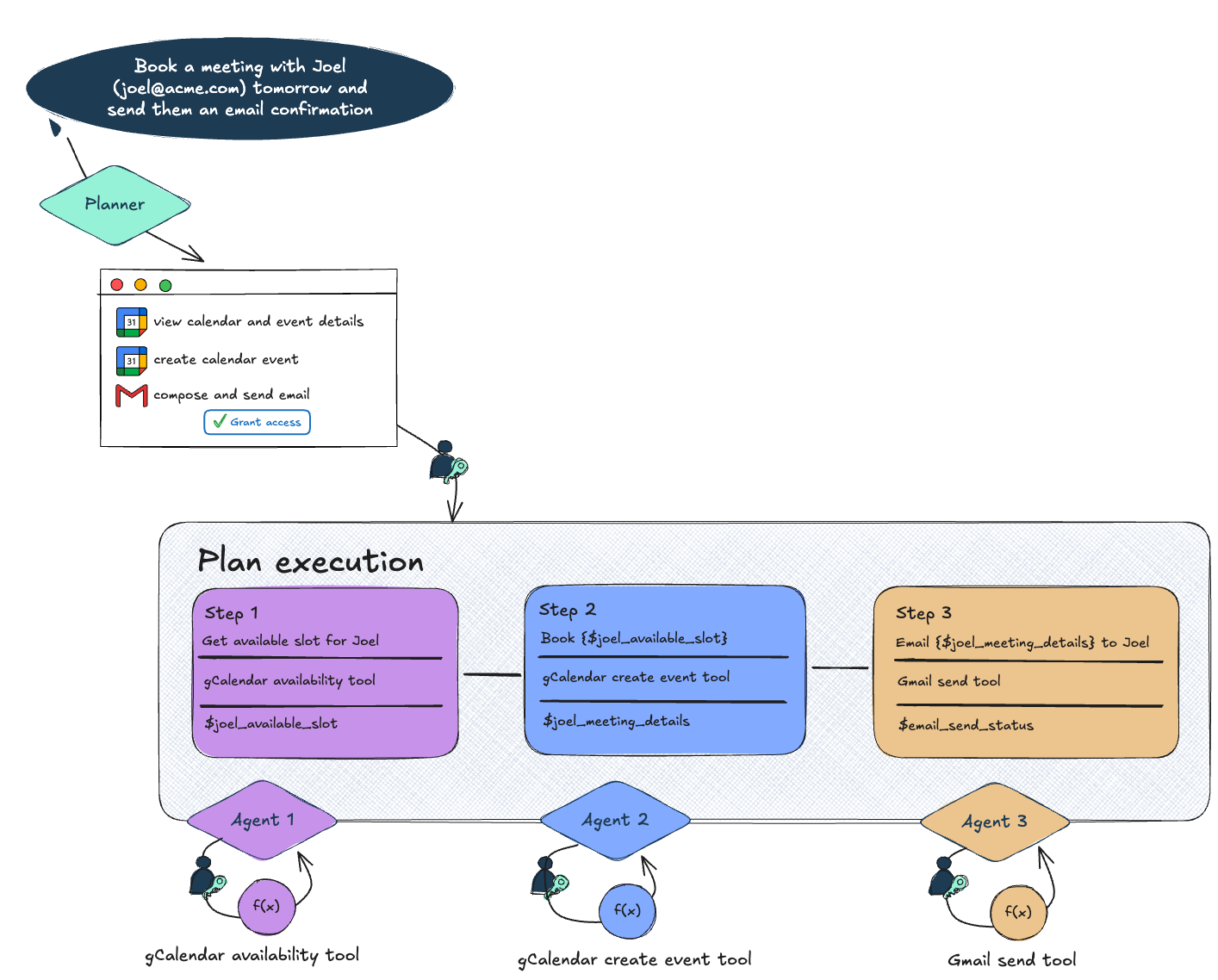

Just-in-time authorization means that an agent obtains authorization only at the point where it is very likely that 1/ it will need that authorization and 2/ it is clear what the authorization will be used for. If we think of agent execution as a graph of execution nodes against systems that might require authentication, there are two ways we can solve this problem:

1/ Authorization at the point of execution

In this case, the agent must pause itself to retrieve the authorization it requires from the user. For this to be useful in an autonomous agent scenario, any state up to that point must be saved so that the agent can resume once the authentication action has been completed.

The advantage of this is that the agent can request exactly the authentication and authorization that it needs for the task it’s trying to execute. The disadvantage is that your agent can only proceed so far autonomously, and you risk it getting stuck every time it hits an authentication requirement.
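To make the pause-and-resume idea concrete, here is a minimal sketch in Python. Everything in it is hypothetical and for illustration only: the `AgentRun` structure, the scope strings such as `calendar.read`, and the `save`/`load` helpers are assumptions, not part of any particular framework. It shows a run that stops at the first step whose scope has not been granted, serializes its state, and continues from the same step once the user has granted that scope.

```python
import json
from dataclasses import dataclass, field


@dataclass
class AgentRun:
    """Resumable run state (hypothetical): steps, progress, and granted scopes."""
    steps: list                      # list of (step_name, required_scope or None)
    next_step: int = 0
    granted: set = field(default_factory=set)
    outputs: list = field(default_factory=list)


def run_until_blocked(run: AgentRun):
    """Execute steps in order. Return the missing scope if blocked, else None."""
    while run.next_step < len(run.steps):
        name, scope = run.steps[run.next_step]
        if scope is not None and scope not in run.granted:
            return scope             # pause here; progress so far stays in `run`
        run.outputs.append(f"done:{name}")   # stand-in for real tool execution
        run.next_step += 1
    return None


def save(run: AgentRun) -> str:
    """Serialize the run so it can be resumed after the user authorizes."""
    return json.dumps({
        "steps": run.steps,
        "next_step": run.next_step,
        "granted": sorted(run.granted),
        "outputs": run.outputs,
    })


def load(blob: str) -> AgentRun:
    """Rebuild a run from its serialized form."""
    data = json.loads(blob)
    return AgentRun(
        steps=[tuple(step) for step in data["steps"]],
        next_step=data["next_step"],
        granted=set(data["granted"]),
        outputs=data["outputs"],
    )


# Usage: run, get blocked, persist, then resume once the scope is granted.
run = AgentRun(steps=[("search_flights", None), ("read_calendar", "calendar.read")])
missing = run_until_blocked(run)     # blocked on the calendar scope
blob = save(run)                     # persist while waiting for the user
resumed = load(blob)
resumed.granted.add(missing)         # the user approved exactly this scope
run_until_blocked(resumed)           # continues from the blocked step
```

The sketch requests one scope at a time, which keeps grants minimal but also illustrates the drawback described above: each missing scope costs a separate interruption.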

2/ Scoped authorization based on an articulated plan

Some agentic systems these days rely on some amount of chain-of-thought reasoning and pre-planning. The idea with this kind of authorization is to pre-process the articulated plan to identify authentication requirements as early as possible, or to group them together to minimize round trips back to the user. This has become a core part of how we have designed Portia AI: the plan and what the agent is attempting to do should always be clear to the human. It also allows us to scope the authorization provided to the agent accurately.
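As a rough illustration of pre-processing a plan, the sketch below collects the scopes a plan needs and groups them into a single consent prompt that also states why each scope is requested. The `PlanStep` type, the tool names, and the scope strings are hypothetical, and this is not a description of how Portia AI implements it.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PlanStep:
    """One step of an articulated plan (hypothetical structure)."""
    description: str
    tool: str
    scope: Optional[str] = None      # None means no authorization is needed


def required_scopes(plan: list[PlanStep]) -> dict[str, list[str]]:
    """Map each required scope to the step descriptions that need it, in plan order."""
    needed: dict[str, list[str]] = {}
    for step in plan:
        if step.scope is not None:
            needed.setdefault(step.scope, []).append(step.description)
    return needed


def consent_prompt(plan: list[PlanStep]) -> str:
    """Build one prompt covering every scope, so the user is asked only once."""
    lines = ["The agent is requesting:"]
    for scope, reasons in required_scopes(plan).items():
        lines.append(f"- {scope} (for: {'; '.join(reasons)})")
    return "\n".join(lines)


plan = [
    PlanStep("Find free slots next week", "calendar", "calendar.read"),
    PlanStep("Summarize the options", "llm"),
    PlanStep("Email the proposed time", "email", "email.send"),
]
print(consent_prompt(plan))
```

Tying each scope to the plan steps that need it keeps the request legible to the human and limits the grant to what the plan actually uses.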

However, agentic systems are increasingly evolving to use adaptive planning, so the authentication requirements may change as the agent discovers more information about its goal or backtracks from a particular route. An adaptation of this approach is to do probabilistic pre-authentication based on some combination of the articulated plan and the domain that the agent is operating in. Ultimately, however, this is just an optimization on top of the pre-authentication described in the first section, and so it has the same fundamental limitations and concerns.

So what is the right solution? The ability to authenticate at the point of execution is a fundamental building block for effective authentication and authorization. With this in place, you can build probabilistic optimization systems on top of it that let the agent progress as far as it can without human interruption, but that can recover when execution diverges from the predicted outcome.
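One way to picture this layering is the sketch below, which is an assumption-laden illustration rather than a reference design. The `execute` function, the `ask_user` callback, and the scope names are all hypothetical. Predicted scopes are requested in one batched prompt up front, and any scope the prediction missed falls back to authorization at the point of execution.

```python
def execute(steps, predicted_scopes, ask_user):
    """Run steps with batched pre-authorization plus an execution-time fallback.

    steps: list of (step_name, required_scope or None)
    predicted_scopes: scopes guessed from the plan or domain (may be wrong)
    ask_user: callable taking a set of scopes, returning the set the user granted
    Returns the number of mid-run interruptions.
    """
    granted = set()
    if predicted_scopes:
        # One round trip for everything we expect to need.
        # Note: a scope predicted here but never used is an overgrant.
        granted |= set(ask_user(set(predicted_scopes)))

    interruptions = 0
    for name, scope in steps:
        if scope is not None and scope not in granted:
            # Prediction missed: recover by asking at the point of execution.
            interruptions += 1
            granted |= set(ask_user({scope}))
            if scope not in granted:
                raise PermissionError(f"user declined {scope} for step {name}")
        # Real tool execution for `name` would happen here.
    return interruptions
```

In this sketch a better prediction only reduces the number of interruptions; correctness never depends on it, because the execution-time check still runs for every step.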

In part 2, we will look at how you can build this just-in-time authentication into agentic systems and how we’ve solved it at Portia AI.