More features for your production agent … and a fundraising announcement

We came out of stealth a few weeks ago. Since then we’ve been working with our first few design partners on developing their production agents and have been heads down building out our SDK to solve their problems. To equip us with enough runway to grow, we’ve also been lucky enough to raise £4.4 million from some of the best investors we could ever hope for: General Catalyst (lead), First Minute Capital, Stem AI and some outstanding angel investors 🚀

In this post we want to give you a sense of what’s coming over the next couple of months.

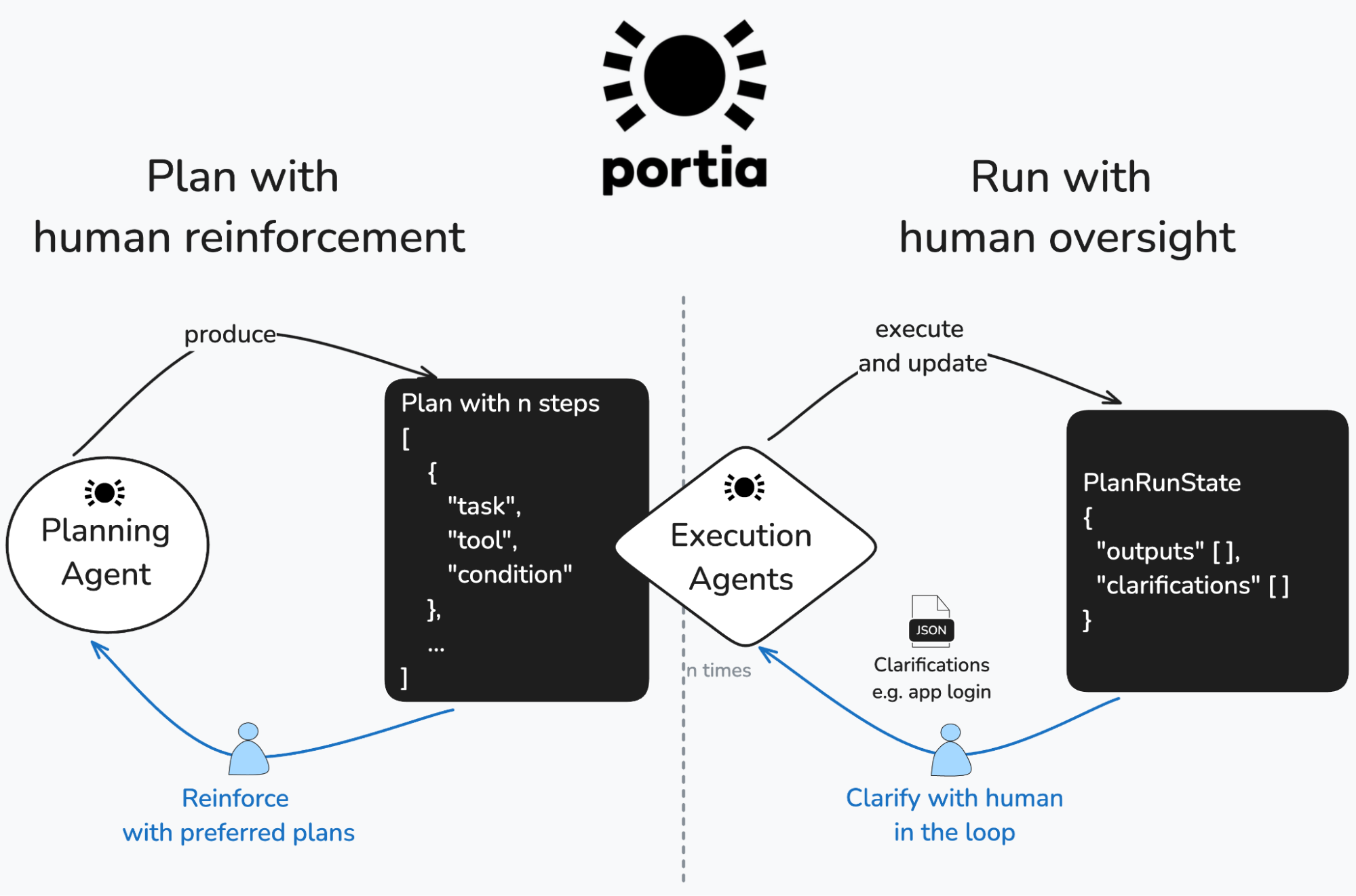

Portia AI is an open source SDK with a cloud component, focused on making it easy for developers to build agents in production. Our three pillars are predictability, controllability and authentication. What does this even mean and why does it matter?

- Predictability: AI agents are attractive because they leverage LLM reasoning to offer a degree of autonomous decision making. Yet many pilot projects have fallen short of moving to production because they lack advance visibility into agents’ behavior. With our Planning agent, developers and/or their end users can express and iterate on the LLM’s intended course of action (the Plan) before execution begins.

- Controllability: Many companies we spoke to are concerned that existing options do not offer the ability to monitor agents’ progress or intervene when needed. These limitations are especially critical in regulated industries such as Financial Services, or in end-user-facing applications where compliance, auditability, and trust are non-negotiable. Portia’s Execution agents update the plan run state (PlanRunState) as they go and are able to pause execution to solicit human input in a structured interaction called a Clarification.

- Authentication: Users want to securely authenticate agents into their applications and confine them to a specific scope. We offer a cloud hosted catalogue of tools with built-in authentication. Get the full story from our recent blog post.
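To make the plan-then-pause idea concrete, here is a minimal, self-contained sketch of a run that executes plan steps in order and stops to ask a human when a step needs input. It is an illustration only and not the Portia SDK API: `RunState`, `PlanRun`, `execute`, `resolve` and the example steps are hypothetical names, and this `Clarification` class is a toy stand-in for the concept described above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class RunState(Enum):
    # Hypothetical states, loosely modelled on the idea of a plan run state.
    NOT_STARTED = "not_started"
    IN_PROGRESS = "in_progress"
    NEED_CLARIFICATION = "need_clarification"
    COMPLETE = "complete"


@dataclass
class Clarification:
    # A structured request for human input (toy version, not the SDK class).
    key: str
    question: str


@dataclass
class PlanRun:
    # Each step is a callable that receives the answers collected so far and
    # returns either an output string or a Clarification.
    steps: list
    state: RunState = RunState.NOT_STARTED
    current_step: int = 0
    outputs: list = field(default_factory=list)
    answers: dict = field(default_factory=dict)
    pending: Optional[Clarification] = None


def execute(run: PlanRun) -> PlanRun:
    """Run steps in order, pausing as soon as one asks for clarification."""
    run.state = RunState.IN_PROGRESS
    while run.current_step < len(run.steps):
        result = run.steps[run.current_step](run.answers)
        if isinstance(result, Clarification):
            run.pending = result
            run.state = RunState.NEED_CLARIFICATION
            return run  # hand control back to the human
        run.outputs.append(result)
        run.current_step += 1
    run.state = RunState.COMPLETE
    return run


def resolve(run: PlanRun, answer: str) -> PlanRun:
    """Record the human's answer and resume from the paused step."""
    if run.pending is None:
        raise ValueError("no clarification is pending")
    run.answers[run.pending.key] = answer
    run.pending = None
    return execute(run)


# Hypothetical example steps.
def look_up_supplier(answers: dict) -> str:
    return "supplier found"


def send_payment(answers: dict):
    if "approve_payment" not in answers:
        return Clarification("approve_payment", "Approve this payment?")
    return f"payment {answers['approve_payment']}"
```

Calling `execute(PlanRun(steps=[look_up_supplier, send_payment]))` stops at the second step with a pending question, and `resolve(run, "approved")` picks up from exactly that step. Because the state is stored on the run rather than in the call stack, a paused run could in principle be persisted and resumed later.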

Teach our Planning agent new things 🧠

Our early adopters already love that they can supply example plans to the Planning agent. This is a tried and tested approach known as “few-shot prompting”. This week, we released a feature that allows developers to simply “like” plans in the Portia dashboard and then we do the rest. Our Planning agent will retrieve the most relevant “liked” plans from Portia Cloud based on the user prompt and load those in as guidance for the agent. We found that the Planning agent is able to reliably adapt complex plans from previously completed tasks to a new task, even when the user only provides a high-level request (e.g. “Retrieve additional data to complete this supplier’s KYB missing information”). In our tests, Portia was able to produce an 8-step and even a 16-step data collection plan with 100% reliability using user-led learning. Previously, producing these plans would have been impossible without extensive prompt engineering.
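For readers who want a feel for how retrieving “liked” plans as few-shot examples can work in general, here is a small sketch. It is not how Portia Cloud implements retrieval, which this post does not describe. We assume a simple word-overlap (Jaccard) similarity in place of whatever ranking the product uses, and `LikedPlan`, `most_relevant` and `build_planner_prompt` are hypothetical names.

```python
import re
from dataclasses import dataclass


@dataclass
class LikedPlan:
    # The request the plan was created for, and its steps (hypothetical shape).
    query: str
    steps: list


def tokens(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity in [0, 1]; an assumed stand-in for real ranking."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def most_relevant(prompt: str, liked: list, k: int = 2) -> list:
    """Return the k liked plans whose original request best matches the prompt."""
    ranked = sorted(liked, key=lambda p: jaccard(prompt, p.query), reverse=True)
    return ranked[:k]


def build_planner_prompt(prompt: str, examples: list) -> str:
    """Prepend the retrieved plans as worked examples, then ask for a new plan."""
    parts = []
    for ex in examples:
        numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(ex.steps, 1))
        parts.append(f"Task: {ex.query}\nPlan:\n{numbered}")
    parts.append(f"Task: {prompt}\nPlan:")
    return "\n\n".join(parts)
```

Passing the result of `most_relevant(...)` into `build_planner_prompt(...)` yields a prompt in which the planner sees previously approved plans before the new request. A production system would more likely rank by embedding similarity, but the shape of the flow is the same: rank, select, prepend.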

We will be sharing a step-by-step cookbook shortly if you’re curious to get hands-on with this feature. Make sure you’re signed up to our Discord or LinkedIn channel so you don’t miss it.

Our current design partners have also shared that they may want access to different variants of our Planning agent, with a live A/B testing ability to select the best-suited plan for the objective at hand. For example, the Planning agent underpinning some of their more generalised agent use cases should be optimised to handle very large tool sets (~500 tools), while another should be optimised for planning large, multi-step data processing tasks. With a smaller local model deployment, we have been able to reduce tool selection errors by 50% using this approach and are open to trialling this with more partners. Do give us a shout with this contact form to learn more.

We’re even more showtime ready 🕺🏼

We’re making it simpler than ever to deploy the Portia SDK for complex tasks in any production environment. We now support all the commonly used model providers, including OpenAI, Anthropic, Mistral, Gemini, Azure OpenAI and Bedrock. You can also wire up your own LLM instance into Portia AI so you can use your preferred local model, such as Llama or DeepSeek, in your own private deployment environment. In Q2 we are introducing the ability to handle very large inputs (e.g. large PDF files, books, etc.) as well as a context-aware approach to handling messy API pagination. Stay tuned for more on this (Discord, LinkedIn).

Elegant auth UX for web agents 🔜

Using our clarification construct to handle human:agent interfaces, we are releasing a headless browser agent that can handle seamless handovers to humans during a session whenever a login is needed, before resuming its task. With our solution, end users will be able to enter their login details directly into the website within the browser session: they will never be compelled to share them with an intermediary party. Be the first to get your hands on this one! (Discord, LinkedIn).

If you’re looking to build agents in production that behave reliably and can be steered, please give us a whirl and share your thoughts. And if you need a white glove partner to help you deploy them, get in touch with us using this form. Our SDK is available (give us a star ⭐), you can get hands-on with some examples in our examples repo or check out this short code-along intro video on our YouTube channel.