A deep dive into our “User-Led Learning” feature

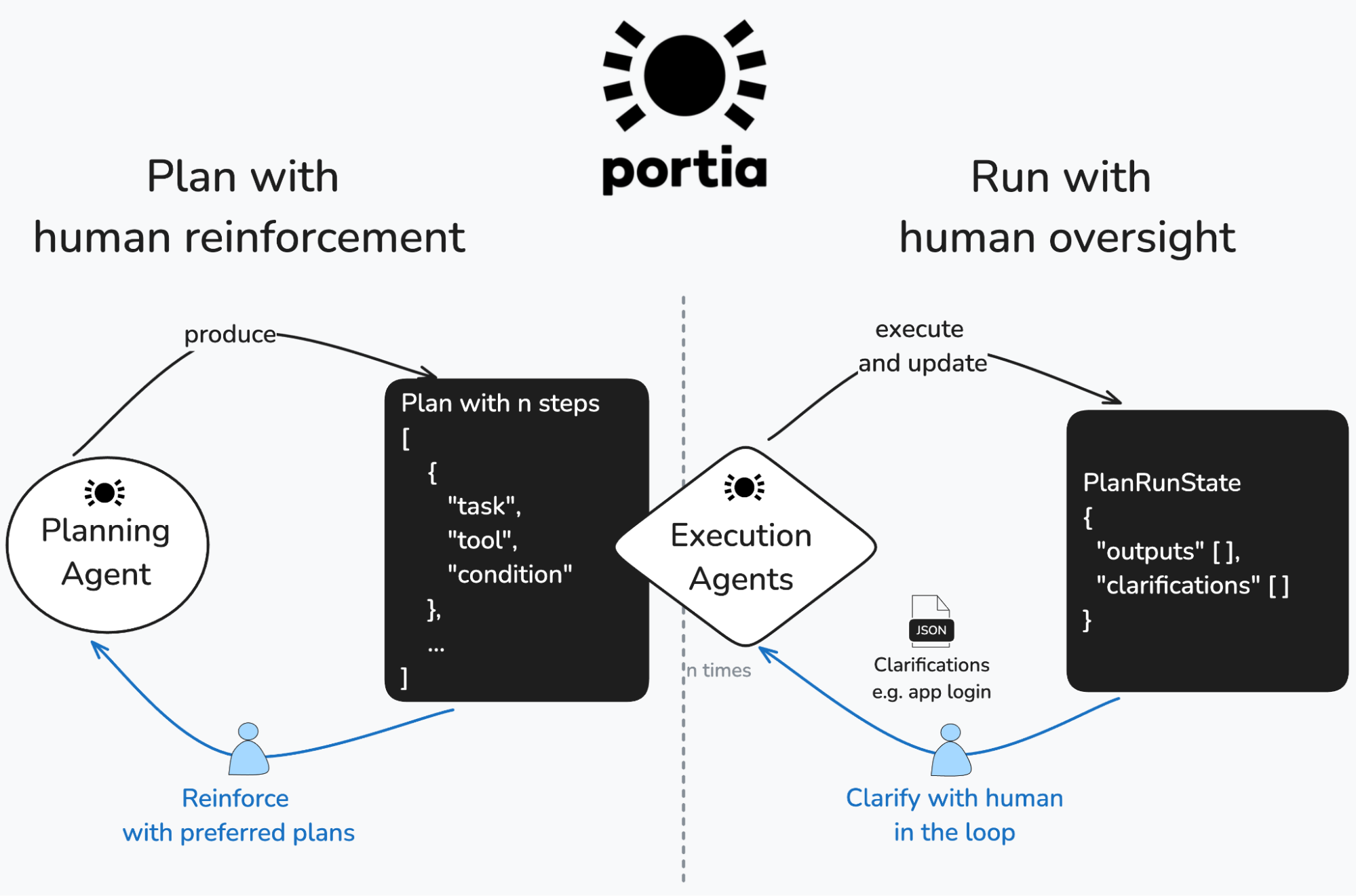

At Portia, we believe building agents for production means balancing AI autonomy with human control, something we call the ‘spectrum of autonomy’. We have previously seen how clarifications can be used during plan runs to handle the human:agent interface. With our new User-Led Learning feature, we’re bringing this kind of feedback into the planning process as well. Developers now have a powerful way to shape the Planning agent’s behavior without rewriting prompts or tweaking models. When you generate a plan using the Portia AI SDK, that plan can be stored in Portia Cloud, where you can mark it as a preferred plan with a simple thumbs-up. Each “like” tells the Portia Planning agent “this was a good plan for this type of user intent”, and over time those signals help planning agents make better decisions on their own. It’s a subtle but powerful shift along the spectrum of autonomy: agents become more capable and self-directed while still staying grounded in what users actually want.

What does User-Led Learning solve?

We see three areas where the tension between AI autonomy and the predictability users want is at its peak:

- Conversational user experiences: When you interact with an agent, your instructions are usually written in natural language. That’s great for usability but tough for precision, because human language is full of implications. You might say “send a message” but really mean “send a WhatsApp message”. Or you might expect the agent to summarize the message afterward, even if you never said so out loud. These gaps between what’s said and what’s meant are where agents can go off course.

- Context-specific workflows: A business might want to automate a complex set of steps that are specific to its own processes, such as its data collection pipelines. This isn’t something an LLM can reason about reliably from its pre-training data. For example, every business may have its own workflow for completing KYC/KYB processes.

- App-specific tool chaining complexity: Some apps require chaining several tools together, but the tool descriptions and arguments alone are not enough to ensure the LLM chains them in the right sequence with high reliability across production-scale volumes of agentic workflows. For example, sending an email may require an ID that must first be retrieved by looking up your email address.

So how does it work?

By surfacing the plans you like, you give the LLM guidance about your preferences so it can bias towards them when it encounters user prompts with similar intent. At a high level this process involves the following:

- You can “Like” plans saved to Portia Cloud from the dashboard to signal that they are your “ground truth”.

- You can then pull “Liked” plans based on semantic similarity to the user intent in a query by using our freshly minted portia.storage.get_similar_plans method.

- Finally, you can ingest those similar plans as example plans in the Planning agent by passing them to the portia.plan method’s example_plans argument.
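Conceptually, that last step is few-shot prompting: approved plans are shown to the planner next to the new request so it can imitate their structure. How Portia formats example plans internally isn't covered here, so treat the following as an illustrative sketch only; `ExamplePlan` and `build_planner_prompt` are hypothetical names, not part of the Portia SDK.

```python
from dataclasses import dataclass


@dataclass
class ExamplePlan:
    """Hypothetical stand-in for a liked plan: the query it served and its steps."""

    query: str
    steps: list[str]


def build_planner_prompt(user_query: str, examples: list[ExamplePlan]) -> str:
    """Assemble a few-shot planning prompt: example plans first, then the new request."""
    sections = []
    for i, example in enumerate(examples, start=1):
        numbered = "\n".join(
            f"  {n}. {step}" for n, step in enumerate(example.steps, start=1)
        )
        sections.append(f"Example {i}\nQuery: {example.query}\nPlan:\n{numbered}")
    sections.append(f"Now write a plan for this query:\n{user_query}")
    return "\n\n".join(sections)


liked = [
    ExamplePlan(
        query="Create a refund for a customer with email john.doe@example.com",
        steps=[
            "Find the customer in Stripe by email",
            "Find payment intents for the customer",
            "Create the refund",
        ],
    )
]
prompt = build_planner_prompt("Process the refund request in inbox.txt", liked)
print(prompt)
```

Putting the examples before the request means the model reads the preferred step ordering first and is nudged to reproduce it for a similar intent.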

Let’s take a scenario that we’ve written about before: building a refund agent (↗) to process refund requests. This typically results in a relatively complex plan of around nine steps, broadly covering the following:

- Load the refund policy and customer request from file

- Use LLM smarts to assess the request

- Request human approval

- Process the refund through three Stripe interactions: find the customer ID, find the relevant payment for that customer, and create the refund.

Without user-led learning: Improving reliability through painstaking prompt engineering

The code blocks in this post are available in our examples repository on GitHub (↗). Make sure you have followed the steps in the README to get set up correctly, including minting a Stripe test API key and installing the project dependencies.

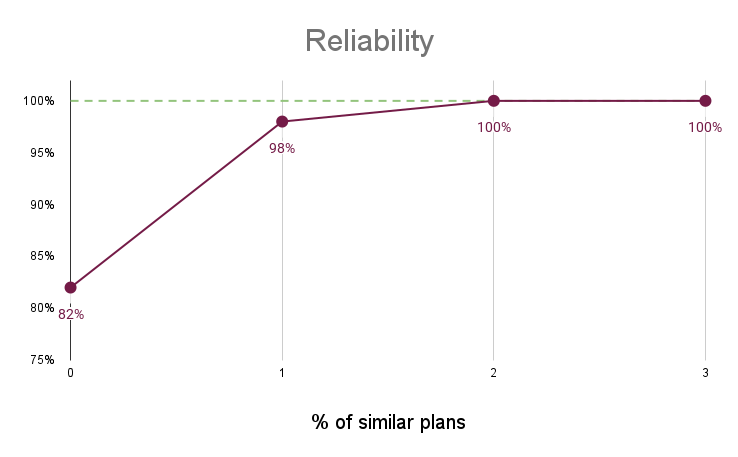

With the kind of multi-step plan we’re looking at here, the quality of your prompt is very important. For example, take the vague_prompt in the code snippet below (accessible in our examples repository on GitHub in the file 01_ull_vague_prompt_no_examples.py (↗)). We found that this relatively vague prompt produced the correct order of steps only 82% of the time. The LLM would sometimes mix up the order of the Stripe interactions or omit one of them, e.g. skipping the step that loads the customer’s payment intents. At times it would even skip the critical step of requesting human approval!

```python
from common import init_portia

# Define the prompts for testing
vague_prompt = """
Read the refund request email from the customer and decide if it should be approved or rejected.
If you think the refund request should be approved, check with a human for final approval and then process the refund.
To process the refund, you'll need to find the customer in Stripe and then find their payment intent.
The refund policy can be found in the file: ./refund_policy.txt
The refund request email can be found in "inbox.txt" file
"""

# This function initializes Portia and all the tools.
# You can find it in common.py
portia_instance = init_portia()

# Generate a plan and print it out.
# 18% of the time, steps will be in the wrong order!
plan = portia_instance.plan(vague_prompt)
print(plan.pretty_print())
```
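Judging “correct order of steps” by eye gets tedious over many runs. One way to automate it, sketched here under our own assumptions, is to reduce each generated plan to its sequence of tool IDs and check a few must-happen-before rules. The Stripe tool IDs match the example plans later in this post; `human_approval`, `file_reader` and `llm` are hypothetical placeholders, and this is not necessarily how the figures quoted in this post were measured.

```python
# (before, after) pairs that every correct refund plan must respect.
# "human_approval" is a hypothetical placeholder for the approval step's tool ID.
CONSTRAINTS = [
    ("mcp:stripe:list_customers", "mcp:stripe:list_payment_intents"),
    ("mcp:stripe:list_payment_intents", "mcp:stripe:create_refund"),
    ("human_approval", "mcp:stripe:create_refund"),
]


def is_correctly_ordered(
    tools: list[str], constraints: list[tuple[str, str]] = CONSTRAINTS
) -> bool:
    """True if every constrained tool is present and each 'before' precedes its 'after'."""
    for before, after in constraints:
        if before not in tools or after not in tools:
            return False  # a required step was omitted
        if tools.index(before) > tools.index(after):
            return False  # steps are in the wrong order
    return True


good = [
    "file_reader",
    "llm",
    "human_approval",
    "mcp:stripe:list_customers",
    "mcp:stripe:list_payment_intents",
    "mcp:stripe:create_refund",
]
print(is_correctly_ordered(good))
print(is_correctly_ordered([t for t in good if t != "human_approval"]))
```

Using pairwise rules rather than one fixed sequence leaves the planner free to place unconstrained steps (such as reading files) wherever it likes.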

We can improve these results by spending more time on the prompt and being more prescriptive about what we want done and when. Here’s a better prompt we arrived at after some prompt engineering and eval runs, which you can run a few times from 02_ull_good_prompt_no_examples.py (↗) in our examples repository on GitHub:

```text
Read the refund request email from the customer and decide if it should be approved or rejected.
If you think the refund request should be approved, check with a human for final approval and then process the refund.

Stripe instructions -- To create a refund in Stripe, you need to:
* Find the Customer using their email address from the List of Customers in Stripe.
* Find the Payment Intent ID using the Customer from the previous step, from the List of Payment Intents in Stripe.
* Create a refund against the Payment Intent ID.

The refund policy can be found in the file: ./refund_policy.txt
The refund request email can be found in "inbox.txt" file.
```

The above prompt gets better results because it breaks down the steps and lists them in order. This significantly increases reliability: when we tested it, the generated plans were correct 94% of the time, a 12% improvement (percentage points, that is 🧐)! But this approach has two issues. Firstly, there’s still a 6% error rate, which is not terrible but not perfect. Secondly, it’s very prescriptive. Instead of giving the agent the autonomy to do the planning for us, we’re pretty much having to program the plan ourselves.
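To make the percentage-point remark concrete, here is the arithmetic on the two correctness rates quoted above, comparing the absolute gain with the relative changes in correctness and in error rate:

```python
# Plan correctness rates reported in this post for each prompt.
vague_rate = 0.82
good_rate = 0.94

# Absolute gain, in percentage points.
gain_pp = round((good_rate - vague_rate) * 100)

# Relative gain in the success rate, and relative drop in the error rate.
relative_gain = (good_rate - vague_rate) / vague_rate
error_reduction = ((1 - vague_rate) - (1 - good_rate)) / (1 - vague_rate)

print(f"{gain_pp} percentage points")
print(f"{relative_gain:.1%} relative improvement in correctness")
print(f"{error_reduction:.1%} fewer incorrect plans")
```

It prints 12 percentage points, a 14.6% relative improvement and a 66.7% drop in incorrect plans: cutting the error rate from 18% to 6% removes two-thirds of the failures.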

Enter user-led learning: hone in on good plans and let Portia do the rest

With user-led learning, the first thing we did was optimise how the Planning agent uses example plans. Secondly, we wanted to capture reinforcing signals from end users as they continuously run plans in production, so that the example plans fed back to the Planning agent reflect the latest workflows in a particular context. So we introduced the ability to “like” plans to signal that they are preferred outcomes. The Planning agent can then pull the most semantically relevant “liked” plans and use them as example plans.

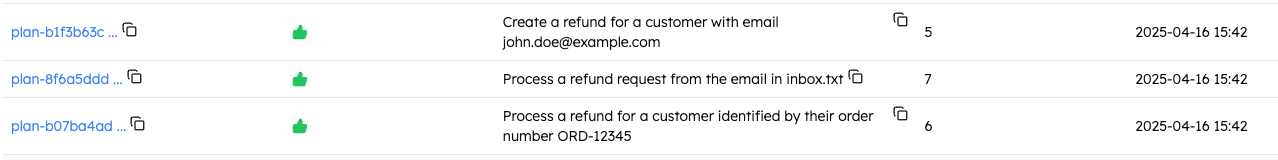

To bring this to life, let’s first simulate the process of a satisfactory plan being created and run several times. In a real-world scenario, you would be using Portia Cloud (i.e. have the PORTIA_API_KEY env variable set, so that the default storage class for all your configs is CLOUD). All plans and plan runs generated would be automatically saved to the cloud and accessible in the dashboard, so outside of this exercise you would simply visit the dashboard and “like” your favourite plans as they emerge! For now, we’re going to create plans using the PlanBuilder (↗) and save them to Portia Cloud so we can then like them from the dashboard. Here’s the code (accessible in 04_ull_create_example_plans.py (↗) in our examples repository on GitHub). Notice the subtle prompt (and therefore plan!) differences we're introducing.

```python
from portia.plan import PlanBuilder

from common import init_portia

# Create example plans for refund processing
example_plans = []

# Example 1: Create refund given user email
plan1 = (
    PlanBuilder(
        "Create a refund for a customer with email john.doe@example.com"
    )
    .step(
        "Find the customer in Stripe by email john.doe@example.com",
        "mcp:stripe:list_customers",
        "$customer_data",
    )
    .step(
        "Extract customer ID from response",
        "extract_customer_id_tool",
        "$customer_id",
    )
    .input("$customer_data")
    .step(
        "Find payment intents for the customer",
        "mcp:stripe:list_payment_intents",
        "$payment_intents",
    )
    .input("$customer_id")
    .step(
        "Extract payment intent ID from response",
        "extract_payment_intent_id_tool",
        "$payment_intent_id",
    )
    .input("$payment_intents")
    .step("Create the refund", "mcp:stripe:create_refund", "$refund_result")
    .input("$payment_intent_id")
    .build()
)
example_plans.append(plan1)

# In the sample code, we add two more example plans here.

portia = init_portia()
for plan in example_plans:
    portia.storage.save_plan(plan)

print("""
Plans saved in Portia cloud storage.
Now you should go to the Portia dashboard and 'like' them.""")
```
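In the plan above, each step names an output variable (such as $customer_data) and later steps consume it via .input(...). The toy check below models that wiring with plain tuples rather than Portia's own plan objects (an assumption made purely for illustration) to show what chaining tools “in the right sequence” means: every input must be produced by an earlier step.

```python
# Each step: (description, tool_id, output_variable, input_variables).
# Plain tuples stand in for Portia's plan/step objects; this is not the SDK's model.
Step = tuple[str, str, str, list[str]]

refund_steps: list[Step] = [
    ("Find the customer in Stripe", "mcp:stripe:list_customers", "$customer_data", []),
    ("Extract customer ID", "extract_customer_id_tool", "$customer_id", ["$customer_data"]),
    ("Find payment intents", "mcp:stripe:list_payment_intents", "$payment_intents", ["$customer_id"]),
    ("Extract payment intent ID", "extract_payment_intent_id_tool", "$payment_intent_id", ["$payment_intents"]),
    ("Create the refund", "mcp:stripe:create_refund", "$refund_result", ["$payment_intent_id"]),
]


def unresolved_inputs(steps: list[Step]) -> list[tuple[str, str]]:
    """Return (step, variable) pairs whose input is not produced by an earlier step."""
    produced: set[str] = set()
    problems = []
    for description, _tool, output, inputs in steps:
        for var in inputs:
            if var not in produced:
                problems.append((description, var))
        produced.add(output)
    return problems


print(unresolved_inputs(refund_steps))
print(unresolved_inputs(list(reversed(refund_steps))))
```

The original order has no unresolved inputs; reversing it leaves four steps waiting on data that hasn't been fetched yet, which is exactly the kind of mis-chaining a liked example plan helps the planner avoid.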

Once these plans have been saved, you can go to the Plans page on the Portia dashboard and click on the thumbs up next to the three plans you just created. (They’ll be on the last page of the list of plans.)

The next step is to use the portia.storage.get_similar_plans method to match a user prompt to the preferred plans. Given the semantic similarity between the vague prompt we introduced in this post and the prompts used to create our three preferred plans, we expect get_similar_plans to retrieve all three of them. Note that this method also lets you adjust the similarity threshold and limit the number of similar plans retrieved. To see how this comes together, make sure you have liked the plans created above in the dashboard, then run the code below (accessible in 05_ull_vague_with_examples.py (↗) in our examples repository on GitHub).

```python
from common import init_portia

# Define the prompts for testing
vague_prompt = """
Read the refund request email from the customer and decide if it should be approved or rejected.
If you think the refund request should be approved, check with a human for final approval and then process the refund.
To process the refund, you'll need to find the customer in Stripe and then find their payment intent.
The refund policy can be found in the file: ./refund_policy.txt
The refund request email can be found in "inbox.txt" file
"""

portia_instance = init_portia()

# Retrieve liked plans that are semantically similar to the prompt.
example_plans = portia_instance.storage.get_similar_plans(vague_prompt)

if not example_plans:
    print(
        "No example plans were found in Portia storage. "
        "Did you remember to create and 'like' the plans from the previous step?"
    )
else:
    print(f"{len(example_plans)} similar plans were found.")
    # Pass the liked plans to the planner as examples.
    plan = portia_instance.plan(
        vague_prompt,
        example_plans=example_plans,
    )
    print(plan.pretty_print())
```
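How get_similar_plans computes similarity isn't spelled out beyond “semantic similarity”, and its parameter names aren't shown in this post. As a rough mental model only, the sketch below filters to liked plans, scores each stored query against the prompt with cosine similarity, drops anything below a threshold and caps the number returned. Bag-of-words vectors are a crude stand-in for the embeddings a real semantic search would typically use, and `similar_liked_plans`, `threshold` and `limit` are hypothetical names, not the Portia API.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Crude stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def similar_liked_plans(
    query: str, plans: list[dict], threshold: float = 0.2, limit: int = 5
) -> list[dict]:
    """Return up to `limit` liked plans scoring >= `threshold`, best match first."""
    q = bag_of_words(query)
    scored = [
        (cosine(q, bag_of_words(plan["query"])), plan)
        for plan in plans
        if plan["liked"]  # only thumbs-up plans are eligible as examples
    ]
    kept = [(score, plan) for score, plan in scored if score >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [plan for _, plan in kept[:limit]]


stored = [
    {"query": "Create a refund for a customer with email john.doe@example.com", "liked": True},
    {"query": "Refund the latest payment for a customer in Stripe", "liked": True},
    {"query": "Create a refund for a customer with email jane@example.com", "liked": False},
    {"query": "Summarise today's unread emails", "liked": True},
]
query = "Process the refund request for this customer in Stripe"
for plan in similar_liked_plans(query, stored):
    print(plan["query"])
```

With these made-up plans, the two liked refund plans come back (the Stripe-flavoured one first), while the unliked refund plan and the unrelated email plan are never returned; raising `threshold` or lowering `limit` trims the list further.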

To give you a sense of the reliability gains you can achieve with user-led learning, check out the chart below. Notice that we were able to increase plan reliability from 82% to 98% with just a single example plan and that two were enough to achieve 100% reliability.
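Reliability figures like these come from generating a plan for the same prompt many times and counting how often the result is correct. A minimal harness might look like the sketch below, assuming you supply a `generate` callable (for example, one wrapping your planner and returning tool IDs) and a `check` function such as an ordering check. Both are stand-ins, and the deterministic `fake_planner` is hypothetical and exists only so the example runs offline.

```python
from typing import Callable, Sequence


def plan_reliability(
    generate: Callable[[int], Sequence[str]],
    check: Callable[[Sequence[str]], bool],
    runs: int = 50,
) -> float:
    """Fraction of `runs` generated plans (as tool-ID sequences) that pass `check`."""
    passed = sum(1 for i in range(runs) if check(generate(i)))
    return passed / runs


GOOD = [
    "human_approval",
    "mcp:stripe:list_customers",
    "mcp:stripe:list_payment_intents",
    "mcp:stripe:create_refund",
]
# Skips human approval and the payment intent lookup.
BAD = ["mcp:stripe:list_customers", "mcp:stripe:create_refund"]


def fake_planner(run_index: int) -> list[str]:
    """Hypothetical deterministic stand-in for a planner: every tenth plan is wrong."""
    return BAD if run_index % 10 == 0 else GOOD


rate = plan_reliability(fake_planner, lambda tools: list(tools) == GOOD, runs=50)
print(f"{rate:.0%} of plans were correct")
```

With a real LLM you would simply call the planner on each run; the more runs you use, the less a difference of a few percentage points is likely to be noise.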

In conclusion

User-led learning allows you to bias your Planning agent towards previous plans you consider a success. You simply “like” plans you like (doh!) amongst those you have saved to Portia Cloud, right from the dashboard. Portia can then fetch the most semantically similar plans to a user prompt and load those as example plans to help steer the Planning agent. Et voilà!

Give this feature a try and let us know how you find it. And as always, please show us some love if you like our content by giving our SDK a star ⭐️ over on GitHub (↗).