A unified framework for browser and API authentication

The core of the Portia authorization framework is the ability for an agent to pause itself and ask a user to authorize an action it wants to perform. With delegated OAuth, we do this by creating an OAuth link that the user clicks to grant Portia a token, which the agent then uses for its API requests. We generally like API-based agents for reliability reasons: they're fast and predictable, and the rise of MCP means integration is getting easier.
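To make the pause-and-authorize pattern concrete, here is a minimal sketch in plain Python. It is not Portia's API: `AuthClarification`, `run_step`, the `TOKENS` store and the example.com endpoints are all hypothetical. When no token is stored for the user, the step builds a standard OAuth 2.0 authorization-code request URL (RFC 6749, section 4.1.1) and returns it as a clarification instead of calling the API.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass
from urllib.parse import urlencode


@dataclass
class AuthClarification:
    """Hypothetical stand-in for a 'please authorize' pause; not Portia's class."""
    message: str
    action_url: str


# Hypothetical in-memory token store, keyed by (user, provider).
TOKENS: dict[tuple[str, str], str] = {}


def build_oauth_url(authorize_endpoint: str, client_id: str, redirect_uri: str,
                    scope: str, state: str) -> str:
    """Build an OAuth 2.0 authorization-code request URL (RFC 6749, 4.1.1)."""
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,
    }
    return f"{authorize_endpoint}?{urlencode(params)}"


def run_step(user_id: str, provider: str) -> str | AuthClarification:
    """Run the step if a token exists; otherwise pause with a clarification."""
    token = TOKENS.get((user_id, provider))
    if token is None:
        url = build_oauth_url(
            "https://auth.example.com/authorize",  # hypothetical endpoint
            client_id="demo-client",
            redirect_uri="https://app.example.com/callback",
            scope="read",
            state=secrets.token_urlsafe(16),  # guards the redirect against CSRF
        )
        return AuthClarification("Please authorize access", url)
    # With a token in hand, the agent would make its API call here.
    return f"called {provider} API with token"
```

Once the user approves, the provider redirects to the callback with a code that is exchanged for a token (omitted here). Storing that token and re-running the step lets the agent carry on from where it paused.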

However, some actions are not easily accessible via an API (surprisingly, my supermarket doesn't offer a delegated OAuth flow!), so there is huge power in being able to switch seamlessly between browser-based and API-based tasks. The question was how to do this consistently and securely within our authorization framework.

With OAuth, authorization is done via a token. The protocol for obtaining it has been solidified over many years, but fundamentally, if you have the token, you have access to the API. With browser-based auth, authentication is largely baked into the browser itself via cookies or local storage. Then you layer on bot protections: 20 years of sophistication has gone into detecting nefarious bots, and that is exactly how an agent looks to a website, irrespective of whether it is actually doing something useful.
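The contrast between the two kinds of credential can be sketched with the Python standard library. This is an illustration only, with hypothetical URLs and values: a bearer token (RFC 6750) is a portable string any client can attach to a request, whereas browser authentication typically arrives as `Set-Cookie` headers that the browser stores and replays automatically on later requests to that site.

```python
from __future__ import annotations

from http.cookies import SimpleCookie
from urllib.request import Request


def api_request(url: str, access_token: str) -> Request:
    """Whoever holds the bearer token can attach it to any request."""
    return Request(url, headers={"Authorization": f"Bearer {access_token}"})


def cookies_from_login(set_cookie_header: str) -> dict[str, str]:
    """Parse a Set-Cookie header the way a browser's cookie store would.

    In a real browser these values never pass through the agent's code;
    they live in the browser profile and are sent back automatically.
    """
    jar = SimpleCookie()
    jar.load(set_cookie_header)
    return {name: morsel.value for name, morsel in jar.items()}
```

This is why the browser case needs a different mechanism: there is no single token to hand over, only state tied to a particular browser session.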

Luckily, the age of agents means various players are rethinking this, and we found a browser infrastructure provider called BrowserBase. Their product lets you create browser sessions with many of the components we needed to make this work. Combining BrowserBase with BrowserUse for goal-oriented tasks and the Portia framework for authorization means we can offer our developers a paradigm very similar to the one they already use with OAuth-based tools.

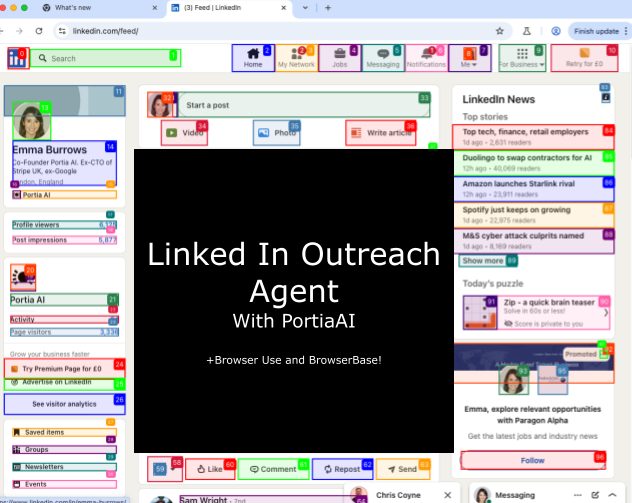

The video below shows an agent we built that combines API-based and browser-based tasks to carry out LinkedIn outreach.

Tool creation

With Portia, developers can add browser-based capabilities to their applications with one line:

```python
tools = PortiaToolRegistry(my_config) + [BrowserToolForUrl("https://www.linkedin.com")]
```

This indicates to the planner that it can navigate to the LinkedIn website to achieve the overall user goal.

Browser agent

When execution reaches a step that requires the browser, we create a browser session, either locally or remotely (using BrowserBase). The browser agent then navigates the website to complete that step's task.

Authentication

The browser agent is instructed to return from its task if it encounters an authentication step. We then produce an ActionClarification containing a link the user clicks to perform the authentication. If the developer is using our end user concept for scalable auth, each end user has a unique session on BrowserBase and a unique login URL. The end user can then log in, and the resulting cookies are saved remotely in BrowserBase's secure cookie store.
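The per-end-user flow above can be modelled in a few lines. This is a hedged sketch, not Portia or BrowserBase code: `LoginClarification` only mirrors the role of the ActionClarification, and `SessionStore`, the `AUTH_REQUIRED` sentinel and the `browser.example.com` login URLs are hypothetical. What it shows is the rule that each end user gets exactly one reusable session and login URL, and that a login wall turns into a request for the human to act.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LoginClarification:
    """Hypothetical stand-in for the ActionClarification; not Portia's class."""
    end_user_id: str
    user_guidance: str
    action_url: str


@dataclass
class SessionStore:
    """Hypothetical map from end user to an isolated remote browser session.

    In the real system the session and its cookies live with the browser
    provider; this only models the one-session-per-end-user rule.
    """
    base_url: str
    sessions: dict[str, str] = field(default_factory=dict)

    def session_for(self, end_user_id: str) -> str:
        # Reuse an existing session so cookies saved at login persist.
        if end_user_id not in self.sessions:
            self.sessions[end_user_id] = f"session-{len(self.sessions) + 1}"
        return self.sessions[end_user_id]

    def login_url(self, end_user_id: str) -> str:
        return f"{self.base_url}/{self.session_for(end_user_id)}/login"


def handle_browser_result(store: SessionStore, end_user_id: str,
                          agent_output: str) -> str | LoginClarification:
    """If the browser agent stopped at a login wall, ask the human to log in."""
    if agent_output == "AUTH_REQUIRED":  # hypothetical sentinel from the agent
        return LoginClarification(
            end_user_id=end_user_id,
            user_guidance="Please log in to continue",
            action_url=store.login_url(end_user_id),
        )
    return agent_output
```

Keying sessions by end user is what keeps one person's saved login from ever being replayed on another person's behalf.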

API or Browser Agents: Who wins?

In the rapidly evolving world of agents, we are frequently asked how to think about browser-based vs API-based agents. Our general rule of thumb is 'use API-based tools when available', but here's a quick comparison of the two:

| | Browser agents | API agents |
|---|---|---|
| Speed | Much slower: can require 10-100x the LLM calls of API-based agents | Faster |
| Cost | Much more expensive | Cheaper |
| Reliability | Less predictable in terms of task completion, but more likely to succeed on retry. Can get blocked by bot protections | Predictable. Requires more investment to create the tools that can be used in agentic systems |
| Types of tasks | Exploratory research tasks. Small, well-defined tasks between API-based tasks | Larger tasks, particularly those involving data processing and linking multiple systems together. Use whenever available |
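The 'use API-based tools when available' rule of thumb can be written as a tiny deterministic selector. In practice the planner makes this choice; the `Tool` type, its `kind` and `domain` fields and `pick_tool` are hypothetical names used only to illustrate the preference order: an API tool for the target service if one exists, otherwise a browser tool, otherwise nothing.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    kind: str    # "api" or "browser"
    domain: str  # service the tool talks to, e.g. "linkedin.com"


def pick_tool(domain: str, tools: list[Tool]) -> Tool | None:
    """Prefer an API tool for the domain; fall back to a browser tool."""
    candidates = [t for t in tools if t.domain == domain]
    for kind in ("api", "browser"):  # preference order from the rule of thumb
        for tool in candidates:
            if tool.kind == kind:
                return tool
    return None  # no tool covers this service
```

The browser fallback is what lets a plan keep going when a service offers no API, at the speed and cost penalty shown in the table.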

What about those bot protections?

When we first started working on this feature, I assumed we would nearly always be blocked by bot protections. Thankfully, this turned out to be rare: on many websites, if you prove that a human is genuinely in the loop at the point of authentication, your agent can proceed, although the 2FA or other multi-factor checks the human has to complete are often more demanding than when browsing the web normally. Some websites still have more fundamental infrastructure blocks, but the approach many seem to be taking, authorizing as long as the agent is genuinely acting on behalf of a human, feels balanced and appropriate.