Build a refund agent with Portia AI and Stripe's MCP server

Anthropic open-sourced its Model Context Protocol (↗), or MCP for short, at the end of last year. The protocol is picking up steam as the go-to way to standardise the interface between agent frameworks and apps / data sources, with the list of official MCP server implementations (↗) growing rapidly. Our early users have already asked for an easy way to expose tools from an MCP server to a Portia client, so we just released support for MCP servers in our SDK ⭐️.

In this blog post we show how you can combine the power of Portia AI’s abstractions with any tool set from an MCP server to create unique agent workflows. The example we go over is accessible in our agent examples repository here (↗).

Connect to MCP servers with the Portia SDK

Connecting to an MCP server allows you to load all tools from that server into a ToolRegistry subclass called an McpToolRegistry, which you can then combine with any other tools you offer to Portia’s planning and execution agents.

We allow developers to load tools from MCP servers into an McpToolRegistry using the two methods commonly available today (to find out more about these options, see the **official MCP docs**):

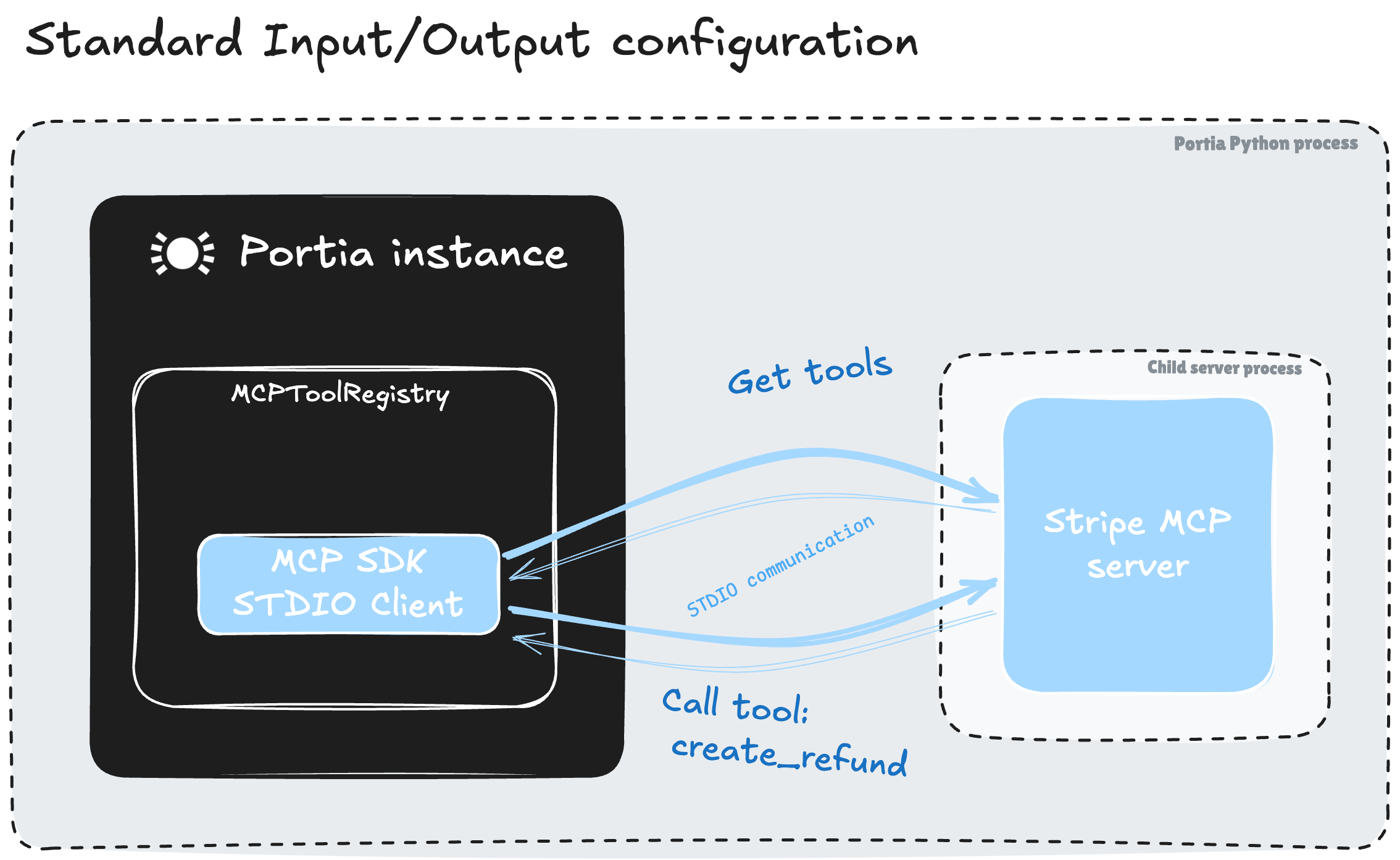

- stdio (Standard Input / Output): The server runs as a subprocess of the Python process where your Portia client is running. Portia’s SDK only requires you to provide a server name and a command with args to spin up the subprocess, giving you the flexibility to integrate a server written in any language using any execution mechanism. This method is useful for local prototyping, e.g. you can load a local MCP server repo, kick off a process and interact with its tools in no time.

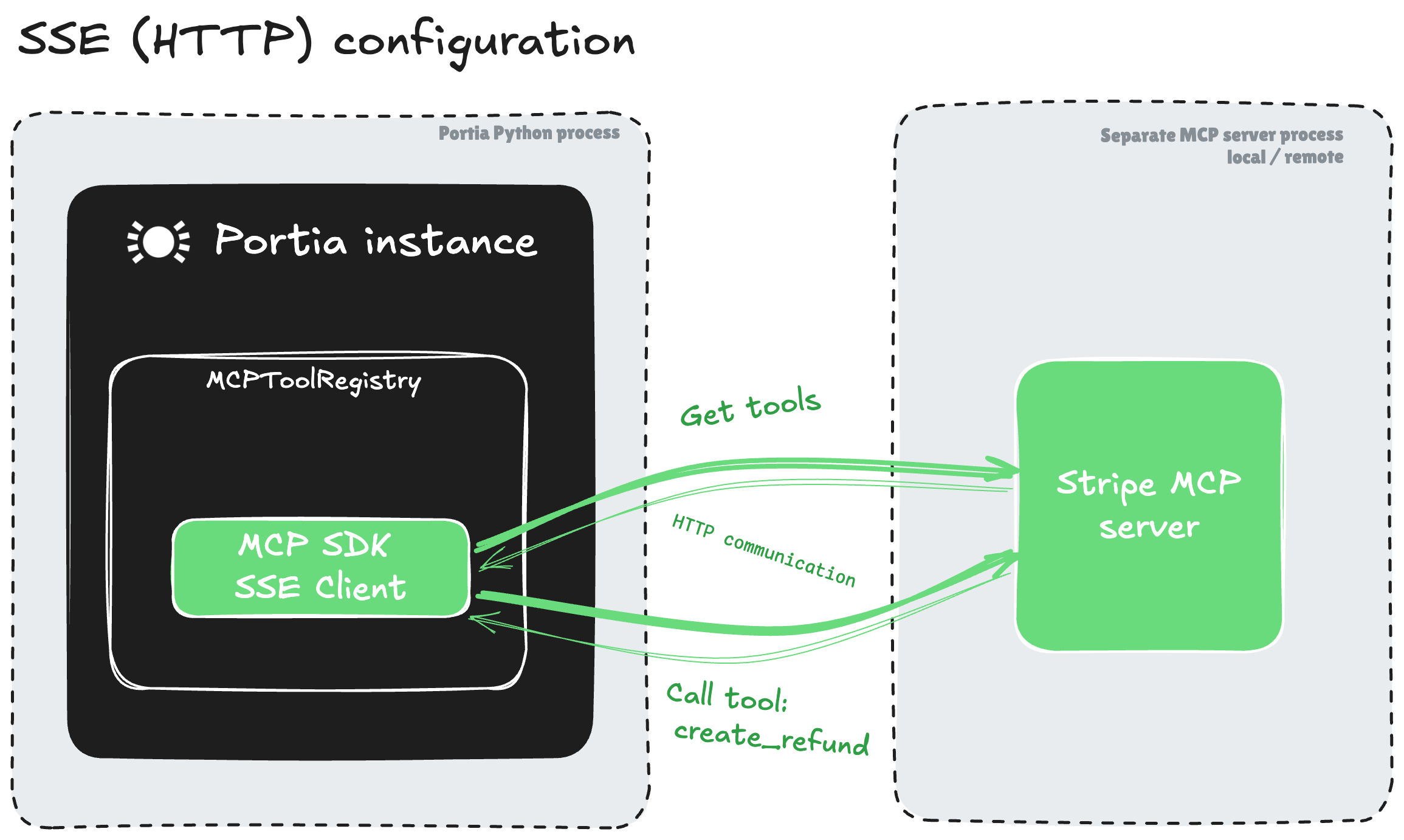

- sse (Server-Sent Events): The server is accessible over HTTP, whether it is deployed locally or remotely. You only need to specify the server name and URL for the Portia SDK to interact with it.

Since this post was published, MCP has standardised on Streamable HTTP (↗), rather than SSE, for remote connections. We now support both methods in our SDK (↗).


In our Stripe example, once you provide the npx command, Portia’s SDK takes over and manages everything for you:

- We spin up the Stripe agent toolkit MCP (↗) server locally using the npx command and args.

- Our built-in MCP client, which uses the official MCP Python SDK (↗) under the hood, queries the Stripe MCP server to find out what tools it provides, and makes these available to your planning and execution agents.

We can then extract the tools and automatically convert them to Portia Tool (↗) objects using a stdio connection:

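    import os

    from portia import McpToolRegistry

    # Launch the Stripe MCP server via npx and load all of its tools.
    stripe_mcp_registry = McpToolRegistry.from_stdio_connection(
        server_name="stripe",
        command="npx",
        args=[
            "-y",
            "@stripe/mcp",
            "--tools=all",
            f"--api-key={os.environ['STRIPE_API_KEY']}",
        ],
    )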
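To make the stdio mechanism more concrete, here is a minimal, standard-library-only sketch of what a client does over a stdio connection: it spawns the server as a subprocess and exchanges newline-delimited JSON-RPC 2.0 messages over stdin / stdout. This is not Portia's or the MCP SDK's implementation. The toy server, its tool names and the `list_tools_over_stdio` helper are all hypothetical, and the sketch skips the `initialize` handshake that a real MCP session performs before `tools/list`.

```python
import json
import subprocess
import sys

# Toy stand-in for an MCP server (hypothetical): it reads one JSON-RPC request
# per line on stdin and answers "tools/list" on stdout.
TOY_SERVER = r'''
import json, sys
TOOLS = [{"name": "list_customers"}, {"name": "create_refund"}]
for line in sys.stdin:
    request = json.loads(line)
    if request.get("method") == "tools/list":
        response = {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": TOOLS}}
    else:
        error = {"code": -32601, "message": "Method not found"}
        response = {"jsonrpc": "2.0", "id": request["id"], "error": error}
    sys.stdout.write(json.dumps(response) + "\n")
    sys.stdout.flush()
'''


def list_tools_over_stdio(command):
    """Spawn the server as a subprocess and ask it which tools it offers."""
    proc = subprocess.Popen(
        command,
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        # A real MCP client sends "initialize" first; omitted in this sketch.
        request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
        proc.stdin.write(json.dumps(request) + "\n")
        proc.stdin.flush()
        response = json.loads(proc.stdout.readline())
        return [tool["name"] for tool in response["result"]["tools"]]
    finally:
        # Closing stdin ends the toy server's read loop so it can exit.
        proc.stdin.close()
        proc.stdout.close()
        proc.wait(timeout=5)


tool_names = list_tools_over_stdio([sys.executable, "-c", TOY_SERVER])
print(tool_names)
```

Because the only contract is the message format on the pipe, the server can be written in any language, which is why a single command plus args is enough to integrate it.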

Use a clarification to loop in a human

Most examples we’ve seen out there don't use the refund tool from Stripe's MCP server, because issuing refunds is a high-risk action for an agent to take on its own. With Portia’s clarifications, we can ensure the agent pauses the plan run and solicits human approval before the refund is created.

During agentic workflows, there may be tasks where your organisation's policies require explicit approvals from specific people, e.g. allowing bank transfers over a certain amount. Clarifications allow you to define these conditions so the agent running a particular step knows when to pause the plan run and solicit input in line with your policies. When Portia encounters a clarification and pauses a plan run, it serialises and saves the latest plan run state. Once the clarification is resolved, the human input captured while handling it is added to the plan run state and the agent can resume step execution.

For more on clarifications, visit our docs (↗).
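The pause, serialise and resume cycle described above can be sketched in a few lines of plain Python. This is only an illustration of the idea, not Portia's implementation: `ToyPlanRun`, its field names and the state strings are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Optional


@dataclass
class ToyPlanRun:
    """Hypothetical, minimal stand-in for a plan run's state."""

    steps: List[str]
    current_step: int = 0
    state: str = "IN_PROGRESS"
    pending_clarification: Optional[str] = None
    step_outputs: Dict[str, str] = field(default_factory=dict)


def pause_for_clarification(run: ToyPlanRun, question: str) -> str:
    """Mark the run as waiting on a human and serialise it for storage."""
    run.state = "NEED_CLARIFICATION"
    run.pending_clarification = question
    return json.dumps(asdict(run))


def resume_with_answer(saved: str, answer: str) -> ToyPlanRun:
    """Reload the saved run, record the human's answer, and continue."""
    run = ToyPlanRun(**json.loads(saved))
    run.step_outputs[f"clarification_{run.current_step}"] = answer
    run.pending_clarification = None
    run.state = "IN_PROGRESS"
    return run


run = ToyPlanRun(steps=["review_request", "create_refund"], current_step=1)
saved = pause_for_clarification(run, "Approve refund?")
resumed = resume_with_answer(saved, "yes")
print(resumed.state, resumed.step_outputs)
```

Serialising the state at the pause point means the human can answer minutes or days later, possibly from a different process, without losing the work done in earlier steps.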

For our refund example, we want the following to happen:

- Load a refund policy document and check the transaction details against it.

  - Reject the request if it is not within the policy and fail the plan run.

  - Otherwise, make a recommendation to approve, along with a rationale.

  → The RefundReviewerTool offers this functionality.

- Before calling the create_refund tool, check with a human for approval.

  - Proceed with the plan run if the human approves it.

  - Otherwise, exit the plan run with an error.

  → The clarify_on_tool_calls hook, used as a before_tool_call execution hook, offers this functionality.

Previously in this example, we used a custom tool definition to prompt for human approval. While this approach is still supported, we've since added Execution hooks to the Portia SDK (↗), providing a much more natural way to achieve the same outcome with less code!

- If the plan run passes the previous step successfully, create a refund using the appropriate tool loaded from Stripe’s MCP server.
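As a rough mental model of the approval step, a before-tool-call hook is a function that runs ahead of every tool call and can ask a human for approval when a specific tool is about to be invoked. The sketch below shows that pattern in plain Python. It is not the Portia SDK's implementation: `require_approval_for`, `call_tool`, `fake_create_refund` and the `pi_123` ID are hypothetical names used for illustration only.

```python
from typing import Callable, Optional

# A hook receives the tool id and its arguments. It returns a question for a
# human when approval is needed, or None to let the call proceed.
Hook = Callable[[str, dict], Optional[str]]


def require_approval_for(tool_id: str) -> Hook:
    """Build a hook that asks for approval only before the named tool."""

    def hook(called_tool_id: str, args: dict) -> Optional[str]:
        if called_tool_id == tool_id:
            return f"Allow call to {called_tool_id} with {args}?"
        return None

    return hook


def call_tool(tool_id, args, tool, before_tool_call, ask_human):
    """Run the hook first and only call the tool if the human approves."""
    question = before_tool_call(tool_id, args)
    if question is not None and not ask_human(question):
        raise PermissionError(f"{tool_id} was rejected by a human")
    return tool(**args)


def fake_create_refund(payment_intent: str) -> str:
    """Stand-in for the real refund tool; it never talks to Stripe."""
    return f"refund created for {payment_intent}"


hook = require_approval_for("mcp:stripe:create_refund")
approved = call_tool(
    "mcp:stripe:create_refund",
    {"payment_intent": "pi_123"},
    fake_create_refund,
    hook,
    ask_human=lambda question: True,  # stand-in for a CLI prompt
)
print(approved)
```

Keeping the approval logic in a hook rather than in a dedicated tool means the plan itself does not need an explicit approval step: the gate applies whenever the sensitive tool is called.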

Bringing it all together

With Portia AI, you don’t need to create individual agents and point them explicitly at each step in the above process or at the required tools. Our planning agent will do exactly that for you. All you need to do is prompt it using the plan method (the more detailed the prompt, the more reliable it will be) and share a superset of tools with it. In the refund_agent.py code, we demonstrate the power of our planning agent by passing our Portia client all the tools from the Stripe MCP tool registry, the local tool described above (RefundReviewerTool), the clarify_on_tool_calls execution hook, and Portia’s catalogue of cloud tools (DefaultToolRegistry).

    # Import paths assume a recent portia-sdk-python release.
    from portia import DefaultToolRegistry, InMemoryToolRegistry, Portia
    from portia.cli import CLIExecutionHooks
    from portia.execution_hooks import clarify_on_tool_calls

    # config, RefundReviewerTool and customer_email are assumed to be
    # defined elsewhere in refund_agent.py.
    portia = Portia(
        config=config,
        tools=(
            stripe_mcp_registry
            + InMemoryToolRegistry.from_local_tools(
                [
                    RefundReviewerTool(),
                ]
            )
            + DefaultToolRegistry(config)
        ),
        # Pause for human approval before the Stripe refund tool is called.
        execution_hooks=CLIExecutionHooks(
            before_tool_call=clarify_on_tool_calls("mcp:stripe:create_refund")
        ),
    )

    plan = portia.plan(
        f"""
    Read the refund request email from the customer and decide if it should be approved or rejected.
    If you think the refund request should be approved, check with a human for final approval and then process the refund.

    Stripe instructions:
    * Customers can be found in Stripe using their email address.
    * The payment can be found against the Customer.
    * Refunds can be processed by creating a refund against the payment.

    The refund policy can be found in the file: ./refund_policy.txt

    The refund request email is as follows:

    {customer_email}
    """
    )

Here you have the option of implementing an end user feedback loop to refine your plan before running it. We demonstrate this with the scheduling agent example here (↗). You also have the option of providing example plans to the Portia planning agent for added reliability, e.g. if you want this refund process to always follow the same set of steps.

The above code will produce a plan in line with the instructions we outlined in the prompt and include the relevant tools automatically. Below is an abridged version:

    [
      {
        "task": "Read the refund policy from the file to understand the conditions for a valid refund.",
        "tool_id": "file_reader_tool"
      },
      {
        "task": "Review the refund request email against the refund policy to decide if the refund should be approved or rejected.",
        "tool_id": "refund_reviewer_tool"
      },
      {
        "task": "If the refund was approved, locate the customer in Stripe using their email address.",
        "tool_id": "mcp:stripe:list_customers"
      },
      {
        "task": "Retrieve the payment intent associated with the found customer to identify the payment to refund.",
        "tool_id": "mcp:stripe:list_payment_intents"
      },
      {
        "task": "Process the refund by creating a refund for the identified payment intent.",
        "tool_id": "mcp:stripe:create_refund"
      }
    ]
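Since the plan is plain structured data, it is easy to inspect before running it. The sketch below parses the abridged plan above (with task descriptions shortened) and reports which steps rely on tools loaded from the Stripe MCP server, and which step would trigger the human approval. The `mcp:stripe:` prefix check is an assumption based on the tool IDs shown in the plan, not a documented naming guarantee.

```python
import json

# Abridged plan from above, with task descriptions shortened for this sketch.
PLAN_JSON = """
[
  {"task": "Read the refund policy", "tool_id": "file_reader_tool"},
  {"task": "Review the refund request", "tool_id": "refund_reviewer_tool"},
  {"task": "Locate the customer", "tool_id": "mcp:stripe:list_customers"},
  {"task": "Retrieve the payment intent", "tool_id": "mcp:stripe:list_payment_intents"},
  {"task": "Create the refund", "tool_id": "mcp:stripe:create_refund"}
]
"""

# The tool id passed to clarify_on_tool_calls in the example.
GATED_TOOL_ID = "mcp:stripe:create_refund"

plan_steps = json.loads(PLAN_JSON)

# Steps that use tools loaded from the Stripe MCP server (assumed prefix).
stripe_tool_ids = [
    step["tool_id"]
    for step in plan_steps
    if step["tool_id"].startswith("mcp:stripe:")
]

# Zero-based indices of steps that would pause for human approval.
gated_step_indices = [
    index
    for index, step in enumerate(plan_steps)
    if step["tool_id"] == GATED_TOOL_ID
]

print(stripe_tool_ids)
print(gated_step_indices)
```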

Finally, running this plan lets you test how it all comes together. Here’s a snazzy snappify animation of the PlanRunState across all steps of the plan run. Note how a clarification is raised and then approved by a human before the execution agent creates the refund in the final step.

Our reflections on working with MCP servers

Here's what we learned experimenting with MCP servers so far:

- Most MCP servers are making use of the tool primitives, but not of prompts or resources, which are also supported in the MCP spec. It is not clear what a best in class implementation of those would be.

- The power of a standardised protocol is real! In our Stripe refund agent example we seamlessly integrate Stripe tools provided by their JavaScript MCP server into a Python Portia agent.

- Provided the app owner who publishes an MCP server is maintaining it, MCP servers can be a powerful and pain-free way of discovering and loading tools into your AI app.

- The limitation is that you are beholden to the MCP server owner’s tool definition, and those can vary in quality (e.g. tool and / or args description does not offer enough guidance for an LLM to invoke the tool reliably at scale). This can be a particular problem with community provided servers, so make sure you check out the quality of the tool definitions.

- The MCP specification does not include an output schema for tools, and many MCP tool descriptions do not describe exactly what the tool returns. This can create challenges for the agent using the tool.

- We need an MCP discovery service that allows an LLM to discover MCP servers from an app owner and load the details to connect to them (an MCP DNS server if you’re into acronym salads 🥗). The MCP folks have a registry concept (↗) in the works to address this issue.

- Tool auth is a challenge for many people looking to deploy Agents in the real world - we’re well aware of that (↗). MCP servers today are generally run locally with credentials such as API keys provided at start-up. Again, the MCP specification is moving quickly and a draft for Auth support has been published (↗).
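On the point about tool definition quality, one cheap safeguard is to lint the definitions a server returns before handing them to an agent. The sketch below assumes tool definitions shaped like the MCP `tools/list` result (`name`, `description`, and a JSON Schema under `inputSchema`). The `lint_tool_definition` helper, the length threshold and both example tools are hypothetical, and passing this check says nothing about whether a description is actually good.

```python
def lint_tool_definition(tool: dict, min_description_length: int = 20) -> list:
    """Return a list of human-readable issues found in one tool definition."""
    issues = []

    # A very short description rarely gives an LLM enough guidance.
    description = tool.get("description") or ""
    if len(description.strip()) < min_description_length:
        issues.append("tool description is missing or very short")

    # Every argument should explain what it expects.
    properties = tool.get("inputSchema", {}).get("properties", {})
    for arg_name, schema in properties.items():
        if not schema.get("description"):
            issues.append(f"argument '{arg_name}' has no description")

    return issues


# Hypothetical tool definitions for illustration only.
well_described_tool = {
    "name": "create_refund",
    "description": "Create a refund for a given payment intent.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "payment_intent": {
                "type": "string",
                "description": "ID of the payment intent to refund",
            }
        },
    },
}
vague_tool = {
    "name": "do_thing",
    "description": "Does it.",
    "inputSchema": {"type": "object", "properties": {"x": {"type": "string"}}},
}

good_issues = lint_tool_definition(well_described_tool)
vague_issues = lint_tool_definition(vague_tool)
print(good_issues)
print(vague_issues)
```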