Building agents with Controlled Autonomy using our new PlanBuilder interface

Balancing autonomy and reliability is a key challenge faced by teams building agents (and getting it right is notoriously difficult! (↗)). At Portia, we’ve built many production-ready agents with our design partners and today we’re excited to share our solution: Controlled Autonomy. Controlled autonomy is the ability to control the level of autonomy of an agent at each step of an agentic plan. We implement this using our newly reshaped PlanBuilder interface to build agentic systems, and today we’re excited to be releasing it into our open-source SDK. We believe it’s a simple, elegant interface (without the boilerplate of many agentic frameworks) that is the best way to create powerful and reliable agentic systems - we can’t wait to see what you build with it!

If you’re building agents, we’d love to hear from you! Check out our open-source SDK and let us know what you’re building on Discord. We also love to see people getting involved with contributions in the repo - if you’d like to get started with this, check out our open issues and let us know if you’d like to take one on.

Straight into an example

Our PlanBuilder interface is designed to feel intuitive and we find agents built with it are easy to follow, so let’s dive straight into an example:

from portia import PlanBuilderV2, StepOutput

plan = (

PlanBuilderV2("Run this plan to process a refund request.")

.input(name="refund_info", description="Info of the customer refund request")

.invoke_tool_step(

step_name="read_refund_policy",

tool="file_reader_tool",

args={"filename": "./refund_policy.txt"},

)

.single_tool_agent_step(

step_name="read_refund_request",

task=f"Find the refund request email from {Input('customer_email_address')}",

tool="portia:google:gmail:search_email",

)

.llm_step(

step_name="llm_refund_review",

task="Review the refund request against the refund policy. "

"Decide if the refund should be approved or rejected. "

"Return the decision in the format: 'APPROVED' or 'REJECTED'.",

inputs=[StepOutput("read_refund_policy"), StepOutput("read_refund_request")],

output_schema=RefundDecision,

)

.function_step(

function=record_refund_decision,

args={"refund_decision": StepOutput("llm_refund_review")})

.react_agent_step(

task="Find the payment that the customer would like refunded.",

tools=["portia:mcp:mcp.stripe.com:list_customers", "portia:mcp:mcp.stripe.com:list_payment_intents"],

inputs=[StepOutput("read_refund_request")],

)

# Full example includes more steps to actually process the refund etc.

.build()

)

The above is a modified extract from our Stripe refund agent (full example here (↗)), setting up an agent that acts as follows:

- Read in our company’s refund policy: this uses a simple

invoke_tool_step, which means that the tool is directly invoked with the args specified with no LLM involvement. These steps are great when you need to use a tool (often to retrieve data) but don’t need the flexibility of an LLM to call the tool because the args you want to use are fixed (this generally makes them very fast too!). - Read in the refund request from an email: for this step, we want to flexibly find the email in the inbox based on the refund info that is passed into the agent. To do this, we use a

single_tool_agent, which is an LLM that calls a single tool once in order to achieve its task. In this case, the agent creates the inbox search query based on the refund info passed in to find the refund email. - Judge the refund request against the refund policy: the

llm_stepis relatively self-explanatory here - it uses your configured LLM to judge whether we should provide the refund based on the request and the policy. We use theStepOutputobject to feed in the results from the previous steps, and theoutput_schemafield allows us to return the decision as a pydantic (↗) object rather than as text. - Record the refund decision: we have a python function we use to record the decisions made - we can call this easily with a

function_stepwhich allows directly calling python functions as part of the plan run. - Find the payment in Stripe: finding a payment in Stripe requires using several tools from Stripe’s remote MCP server (which is easily enabled in your Portia account (↗)). Therefore, we set up a ReAct (↗) agent with the required tools and it can intelligently chain the required Stripe tools together in order to find the payment. As a bonus, Portia uses MCP Auth by default so these tool calls will be fully authenticated.

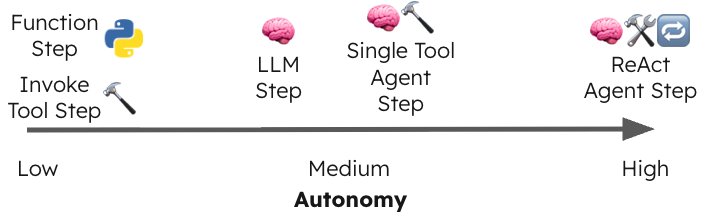

Controlled Autonomy

As demonstrated in the above example, the power of PlanBuilderV2 comes from the fact you can easily connect and combine different step types, depending on your situation and requirements. This allows you to control the amount of autonomy your system has at each point in its execution, with some steps (e.g. react_agent_step) making use of language models with high autonomy while others are carefully controlled and constrained (e.g. invoke_tool_step).

From our experience, it is this ‘controlled autonomy’ that is the key to getting agents to execute reliably, which allows us to move from exciting prototypes into real, production agents. Often, prototypes are built with ‘full autonomy’, giving something like a ReAct agent access to all tools and letting it loose on a task. This approach is possible with our plan builder and can work well in some situations, but in other situations (particularly for more complex tasks) it can lead to agents that are unreliable. We’ve found that tasks often need to be broken down and structured into manageable sub-tasks, with the autonomy for each sub-task controlled, for them to be done reliably. For example, we often see research and retrieval steps in a system being done with high autonomy ReAct agent steps because they generally use read-only tools that don’t affect other systems. Then, when it comes to the agent taking actions, these steps are done with zero or low autonomy so they can be done in a more controlled manner.

Simple Control structures

Extending the above example, our PlanBuilderV2 also provides familiar control structures that you can use when breaking down tasks for your agentic system. This gives you full control to ensure that the task is approached in a reliable way:

# Conditional steps (if, else if, else)

.if_(condition=lambda review: review.decision == REJECTED,

args={"llm_review_decision": StepOutput("llm_refund_review")})

.function_step(

function=handle_rejected_refund,

args={"proposed_refund": StepOutput("proposed_refund")})

.endif()

# Loops - here we use .loop(over=...), but there are also alternatives for

# .loop(while=...) and .loop(do_while=...)

.loop(over=StepOutput("Items"), step_name="Loop")

.function_step(

function=lambda item: print(item),

args={"item": StepOutput("Loop")})

.end_loop()

We went with .if_() rather than .if() (note the underscore) because if is a restricted keyword in python

Human - Agent interface

Another aspect that is vital towards getting an agent into production is the ability to seamlessly pass control between agents and humans. While we build trust in agentic systems, there are often key steps that require verification or input from humans. Our PlanBuilder interface allows both to be handled easily, using Portia’s clarification system (↗):

# Ensure a human approves any refunds our agent gives out

builder.user_verify(

message=f"Are you happy to proceed with the following proposed refund: {StepOutput('proposed_refund')}?")

# Allow your end user to provide input into how the agent runs

builder.user_input(

message="How would you like your refund?",

options=["Return to purchase card", "gift card"],

)

Controlling your agent with code

The function_step demonstrated earlier is a key addition to PlanBuilderV2. In many agentic systems, all tool and function calls go through a language model, which can be slow and also can reduce reliability. With function_step, the function is called with the provided args at that point in the chain with full reliability. We’ve seen several use-case for this:

- Guardrails: where deterministic, reliable code checks are used to verify agent behaviour (see example below)

- Data manipulation: when you want to do a simple data transformation in order to link tools together, but you don’t want to pay the latency penalty of an extra LLM call to do the transformation, you can instead do the transformation in code.

- Plug in existing functions: when you’ve already got the functionality you need in code, you can use a function_step to easily plug that into your agent.

# Add a guardrail to prevent our agent giving our large refunds

builder.function_step(

step_name="reject_payments_above_limit",

function=reject_payments_above_limit,

args={"proposed_refund": StepOutput("proposed_refund"), "limit": Input("payment_limit")})

What’s next?

We’ve really enjoyed building agents with PlanBuilderV2 and are excited to share it more widely. We find that it complements our planning agent nicely: our planning agent can be used to dynamically create plans from natural language when that is needed for your use-case, while the plan builder can be used if you want to more carefully control the steps your agentic system takes with code.

We’ve also got more features coming up over the next few weeks that will continue to make the plan builder interface even more powerful:

- Parallelism: run steps in parallel with

.parallel(). - Automatic caching: add

cache=Trueto steps to automatically cache results - this is a game-changer when you want to iterate on later steps in a plan without having to fully re-run the plan. - Step error handler: specify

.on_error()after a step to attach an error handler to it,.retry()to allow retries of steps or useexit_step()to gracefully exit a plan. - Linked plans: link plans together by referring to outputs from previous plan runs.

plan = (

PlanBuilderV2("Run this plan to process a refund request.")

# 1. Run subsequent steps in parallel

.parallel()

.invoke_tool_step(

tool="file_reader_tool",

args={"filename": "./refund_policy.txt"},

# 2. Add automatic caching to a step

cache=True

)

# 3. Add error handling to a step

.on_error()

.react_agent_step(

# 4. Link plans together by referring to outputs from a previous run

# Here, we could have a previous agent that determines which customer refunds to process

task=f"Read the refund request from my inbox from {PlanRunOutput(previous_run)}.",

tools=["portia:google:gmail:search_email"],

)

# Resume series execution

.series()

)

So give our new PlanBuilder a try and let us know how you get on - we can’t wait to see what you build! 🚀

For more details on PlanBuilderV2, check out our docs (↗), our example plan (↗) or the full stripe refund example (↗). You can also join our Discord (↗) to hear future updates.